With all that’s been written about analytics, fault detection, automated diagnostics, and continuous commissioning, the truth is that technology alone does not save money. It’s not about the technology; it’s about people, processes, and implementation. Technology is merely the enabler. Analytics is a tool, not the remedy. Think of it this way: you don’t feel well, so you go to the doctor, and the doctor orders an MRI. The MRI costs money. However, the MRI does not cure your ailment; it simply gives the doctor insight into what is wrong. The cure could be relatively simple (low cost), such as a prescription, or major (high cost), such as surgery. Either remedy (corrective action) costs money to fix your ailment. The MRI is analytics: you need to spend money on it, but by itself, it will not save you money.

As with the MRI, meaningful bottom-line results come from implementing the recommendations identified by evaluating analytics. That change is the result of people and processes. While it’s true that you need good technology, technology only enables the solution; it is not the solution in itself.

Case in point: at a recent conference, there was a dizzying array of analytics products that shared similar features and all claimed to do the same thing. However, what seemed to be missing from all of the discussions was the importance of people and process in achieving a successful outcome. Further, there seemed to be a pervasive notion that analytics saves money. As stated, analytics can only provide insight into the actions necessary to save money.

The following key points have little to do with technology, but they are critical to a successful result.

Budget

How much money is allocated toward corrective action and preventative maintenance? This may seem obvious, but all the money spent on analytics won’t return a penny to the bottom line if the issues identified are not actually addressed. If an organization’s maintenance strategy is “run to failure,” it is virtually impossible for any analytics solution to achieve the desired results. The solution should be to identify and address the issue PRIOR TO failure. Sufficient money and resources must be allocated to correct the issues identified by the solution.

Process or Work Flow

Corrective action needs to be implemented within a clearly defined process. The key to a successful strategy, one that is aligned with an organization’s budget and resources, is to build a process around the technology. The process paces corrective action to match what the organization can effectively support. Identifying more issues than can realistically be addressed only frustrates stakeholders and undermines support for the solution. The ultimate solution is built around a process that effectively triages, prioritizes, and finally corrects the issues that have the greatest impact on the organization’s bottom line without placing an undue burden on its budget and resources.

We often hear that more data is better, more rules are better, and so on. The truth is that more is not always better if it does not align with the organization’s ability to process it, or if it does not return enough value to offset the cost of obtaining it.

Prioritization and Cost Impact

There are a number of methods for prioritizing the issues identified by an analytics solution:

- Frequency – How many times did the fault occur?

- Duration – How long did the fault condition last?

- Cost impact – How much did the fault cost? Or, how much could the organization have saved had the fault been corrected? This is typically expressed in per-hour units.

- Importance – How critical is the area served by the equipment associated with the fault?
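To make the four criteria concrete, here is a minimal sketch of ranking faults by them, assuming each fault record carries a count of occurrences, total hours active, a per-hour cost impact, and a hypothetical 1 to 3 importance rating for the area served. The `Fault` fields, the sort order (importance first, then accumulated cost, frequency, and duration), and the sample faults are all illustrative assumptions, not a method prescribed by the article.

```python
from dataclasses import dataclass


@dataclass
class Fault:
    name: str
    occurrences: int        # frequency: how many times the fault occurred
    hours_active: float     # duration: total hours the fault condition lasted
    cost_per_hour: float    # cost impact, in currency units per hour
    area_importance: int    # hypothetical rating: 1 (low) to 3 (critical)


def estimated_cost(f: Fault) -> float:
    """Per-hour cost impact accumulated over the fault's duration."""
    return f.hours_active * f.cost_per_hour


def priority_key(f: Fault):
    # Hypothetical ordering: area importance first, then accumulated cost,
    # then frequency, then duration as tie-breakers.
    return (f.area_importance, estimated_cost(f), f.occurrences, f.hours_active)


def prioritize(faults):
    """Return faults sorted from highest to lowest priority."""
    return sorted(faults, key=priority_key, reverse=True)


# Illustrative sample faults (made-up values, not measured data).
faults = [
    Fault("AHU-1 economizer stuck", 3, 120.0, 2.50, 2),
    Fault("RTU-4 simultaneous heat/cool", 10, 40.0, 4.00, 2),
    Fault("Data room CRAC high temp", 1, 2.0, 1.00, 3),
]
for f in prioritize(faults):
    print(f.name, estimated_cost(f))
```

Putting area importance first means a low-cost fault in a critical space still rises to the top; an organization that weighs pure cost more heavily could simply reorder the tuple.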

Frequency and duration are easily understood and easy to quantify. Cost impact, however, is another story. There is very little quantifiable data available to measure cost impact; there are simply too many variables that influence its value. For example, consider an economizer failure. An accurate cost impact value requires considering, at a minimum, the following:

- Equipment age and condition

- Climate zone

- Hours of operation

- Energy cost

- Type of occupancy

For a single-site implementation, this may not seem too difficult; the values would only need to be tailored to each asset to which the rule applies. However, when implementing this rule across hundreds of sites, capturing and entering this data is a formidable task. An approach that is effective in these situations is to apply some level of generalization, which provides a reasonable level of accuracy for the time required to set up the data. Is it completely accurate for each piece of equipment? No. However, keep in mind that we are using this value to prioritize corrective actions, not to predict absolute cost avoidance. Regardless of the method chosen, it should be mutually accepted by the service provider and the customer. In this way, actions and results are transparent and understood by all stakeholders.

Corrective Action

Once prioritized, how are corrective actions implemented? Typically, they fall into one of three buckets: immediate action, scheduled, or aggregated. Immediate action items are those that are both urgent and important. Scheduled items are important, but not necessarily urgent. Other corrective actions can be aggregated to form more strategic improvement projects. Regardless of the approach, it is imperative that the corrective action process keep pace with the issues that are identified.
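The three buckets map naturally onto an urgent/important test. The sketch below assumes each action is tagged with those two flags and a hypothetical `system` label used to group the remaining actions into projects; treating anything that is not important as "aggregate" is an interpretation of the text, not a rule it states.

```python
from collections import defaultdict
from enum import Enum


class Bucket(Enum):
    IMMEDIATE = "immediate action"
    SCHEDULED = "scheduled"
    AGGREGATE = "aggregate"


def assign_bucket(urgent: bool, important: bool) -> Bucket:
    """Urgent and important -> immediate; important only -> scheduled; else aggregate."""
    if urgent and important:
        return Bucket.IMMEDIATE
    if important:
        return Bucket.SCHEDULED
    return Bucket.AGGREGATE


def build_projects(actions):
    """Group aggregate-bucket actions by system into candidate projects.

    `actions` is a list of (description, system, urgent, important) tuples;
    the `system` grouping key is a hypothetical choice for illustration.
    """
    projects = defaultdict(list)
    for description, system, urgent, important in actions:
        if assign_bucket(urgent, important) is Bucket.AGGREGATE:
            projects[system].append(description)
    return dict(projects)


# Illustrative sample actions (made-up, not from the article).
actions = [
    ("Replace failed supply fan VFD", "HVAC", True, True),
    ("Recalibrate zone temperature sensors", "HVAC", False, True),
    ("Adjust lighting schedule, floor 2", "Lighting", False, False),
    ("Adjust lighting schedule, floor 3", "Lighting", False, False),
]
for description, system, urgent, important in actions:
    print(assign_bucket(urgent, important).value, "-", description)
print(build_projects(actions))
```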

Think of the process as a lens. In the initial deployment, we expect to find many issues; the rules provide a broad view of the portfolio, buildings, and assets. As issues are identified, prioritized, and corrected, the focus becomes more concentrated. Simply put, focus moves from the low-hanging fruit to searching for “change in the couch.” If the process fails to correct the obvious, the change in the couch will never be found and efforts will be stymied. This is one of the primary purposes of the process: to “throttle” the number of issues to a level the organization can handle from a staffing and/or budget standpoint.
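Throttling can be sketched as walking an already-prioritized list and releasing work only while staff hours and budget for the period remain; everything else is deferred to the next cycle. The `Issue` fields (estimated labor hours and cost) and the capacity figures are hypothetical assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    labor_hours: float  # hypothetical estimate of technician hours to correct
    cost: float         # hypothetical estimate of parts/contractor cost


def throttle(prioritized, hours_available, budget_available):
    """Release issues in priority order while staff hours and budget remain.

    Returns (released, deferred). An issue that does not fit is deferred,
    and the loop keeps looking for smaller, lower-priority issues that do.
    """
    released, deferred = [], []
    for issue in prioritized:
        if issue.labor_hours <= hours_available and issue.cost <= budget_available:
            released.append(issue)
            hours_available -= issue.labor_hours
            budget_available -= issue.cost
        else:
            deferred.append(issue)
    return released, deferred


# Illustrative prioritized list and period capacity (made-up values).
issues = [Issue("A", 8, 500), Issue("B", 16, 3000), Issue("C", 4, 200)]
released, deferred = throttle(issues, hours_available=20, budget_available=1000)
print([i.name for i in released], [i.name for i in deferred])
```

Skipping an oversized issue rather than stopping keeps the crew busy with work it can actually finish; an organization that prefers strict priority order could instead break out of the loop at the first issue that does not fit.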

The budget must provide for both the analytics solution and corrective action. Establishing a budget for implementing the analytics solution is fairly straightforward. However, consideration must also be given to budgeting for continuous corrective action and to the level of “throttling” needed to make the overall process sustainable. Identifying more issues than can be corrected is of little value and could ultimately be counterproductive.

Current Condition

What is the current condition of the equipment and is there an active program for maintenance and improvements? Once the analytics solution is run, what is it likely to find? A building or portfolio of buildings whose equipment is well maintained and has a regular replacement cycle in place will likely result in fewer “major” issues discovered by the analytics solution.

However, applying analytics to equipment that has not been properly maintained will likely produce significant findings, including major repairs. This needs to be considered when planning an analytics solution, because the findings could be overwhelming. This is not to say that it shouldn’t be done, or that poorly maintained equipment is not a good candidate for an analytics solution. Quite the contrary: these are excellent situations for analytics, and the opportunity for meaningful results is significant. However, there needs to be a clear understanding that the problem wasn’t created overnight, and it won’t be corrected overnight.

Conclusion

Implementing analytics should be done with the understanding that it is not a discrete project. Rather, it is a continuous process in which issues are identified and corrected in a prioritized manner, within the limits of staff and budget. When those tasks are completed, another level of issues is identified and addressed. As each successive layer of issues is resolved, the process narrows its focus and moves to greater levels of detail, which continues to drive greater efficiency and, ultimately, cost savings. Keep in mind that there is no “silver bullet” for success. It’s an ongoing commitment to continuous improvement.

About the Author: Paul Oswald joined CBRE in 2015 as part of CBRE’s acquisition of Environmental Systems, Inc. (ESI), a leading systems integrator and provider of energy management services. Mr. Oswald is now the Managing Director of CBRE|ESI, which is part of CBRE’s Global Energy & Sustainability (GES) services platform. Based in Brookfield, WI, CBRE|ESI assists CBRE clients with identifying and implementing portfolio-wide energy reductions, cost savings, and sustainable operating practices within the broader GES service offering. Mr. Oswald leads a team of dedicated professionals and subject matter experts who provide services including strategy and design; system integration; turnkey project delivery; and managed services for HVAC, lighting, security, energy, control, and metering systems. Mr. Oswald has over 35 years of experience in building automation, system integration, and energy management. His experience includes product strategy and development, business and channel development, and service delivery. Prior to acquiring ESI in 2002, he worked at Tridium, Square D, and Invensys (now part of Schneider Electric).

Related Stories

AEC Tech | Aug 24, 2017

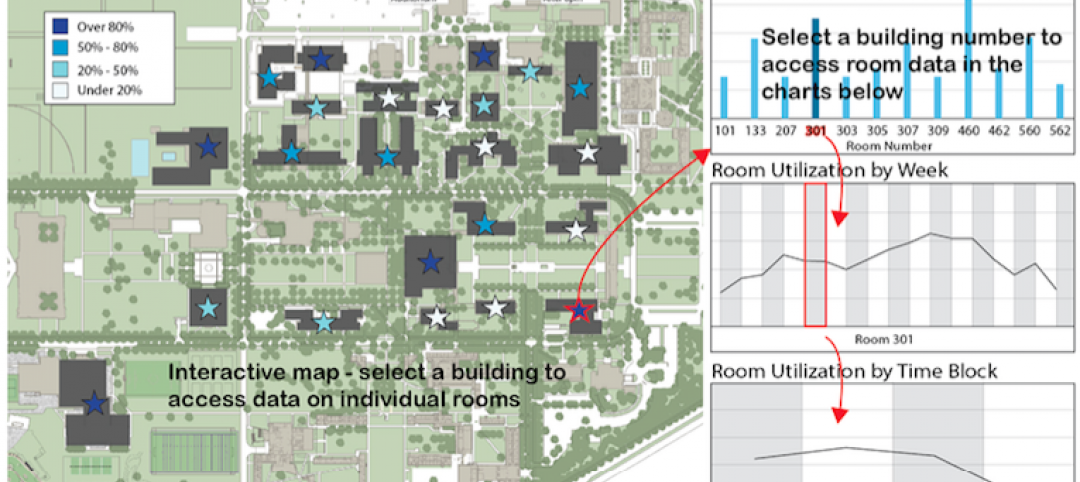

Big Data helps space optimization, but barriers remain

Space optimization is a big issue on many university campuses, as schools face increasing financial constraints, writes Hanbury’s Jimmy Stevens.

| Jun 13, 2017

Accelerate Live! talk: A case for Big Data in construction, Graham Cranston, Simpson Gumpertz & Heger

Graham Cranston shares SGH’s efforts to take hold of its project data using mathematical optimization techniques and information-rich interactive visual graphics.

| May 24, 2017

Accelerate Live! talk: Applying machine learning to building design, Daniel Davis, WeWork

Daniel Davis offers a glimpse into the world at WeWork, and how his team is rethinking workplace design with the help of machine learning tools.

| May 24, 2017

Accelerate Live! talk: Learning from Silicon Valley - Using SaaS to automate AEC, Sean Parham, Aditazz

Sean Parham shares how Aditazz is shaking up the traditional design and construction approaches by applying lessons from the tech world.

| May 24, 2017

Accelerate Live! talk: The data-driven future for AEC, Nathan Miller, Proving Ground

In this 15-minute talk at BD+C’s Accelerate Live! (May 11, 2017, Chicago), Nathan Miller presents his vision of a data-driven future for the business of design.

Big Data | May 24, 2017

Data literacy: Your data-driven advantage starts with your people

All too often, the narrative of what it takes to be ‘data-driven’ focuses on methods for collecting, synthesizing, and visualizing data.

Sustainable Design and Construction | Apr 5, 2017

A new app brings precision to designing a building for higher performance

PlanIt Impact's sustainability scoring is based on myriad government and research data.

AEC Tech | Dec 18, 2016

Customized future weather data now available for online purchase

Simulation tool, developed by Arup and Argos Analytics, is offered to help owners and AEC firms devise resilience strategies for buildings.

Big Data | May 9, 2016

City planners find value in data from Strava, a cyclist tracking app

More than 75 metro areas around the world examine cyclists’ routes and speeds that the app has recorded.

AEC Tech | May 9, 2016

Is the nation’s grand tech boom really an innovation funk?

Despite popular belief, the country is not in a great age of technological and digital innovation, at least when compared to the last great innovation era (1870-1970).