News recently broke about Flux’s pivot away from making tools focused on interoperability between building design tools. The AEC startup alerted its customers that its web-based data management platform and desktop tools would be taken offline starting on March 31, 2018. This gives designers just a little over a month to find alternatives if they are relying on the platform to develop their projects.

No doubt this is leaving the users of their platform feeling burned – especially those who are using Flux to stitch together critical project workflows. For Flux, it was surely an incredibly difficult decision as they have put a tremendous amount of effort into crafting their current product suite.

This recent event has left me reflecting back on my own experiences with the data interoperability challenges that face the building industry. As I’ve illustrated in previous articles, our industry continues to operate within the walled gardens of proprietary building applications and protected data sources.

However, in recent years we have seen the rise of powerful tools, exchange formats, and workflows. Open source software tools like Rhynamo provide interfaces for making direct file connections between common tools like Rhino and Revit. New tools, like Speckle, are introducing their own flavor of web-based data communication. Free tools, such as Salamander, focus on creating connections between design and FEA structural software such as GSA and ETABS. Open standards, such as IFC, offer ‘platform neutral’ file storage for building information.

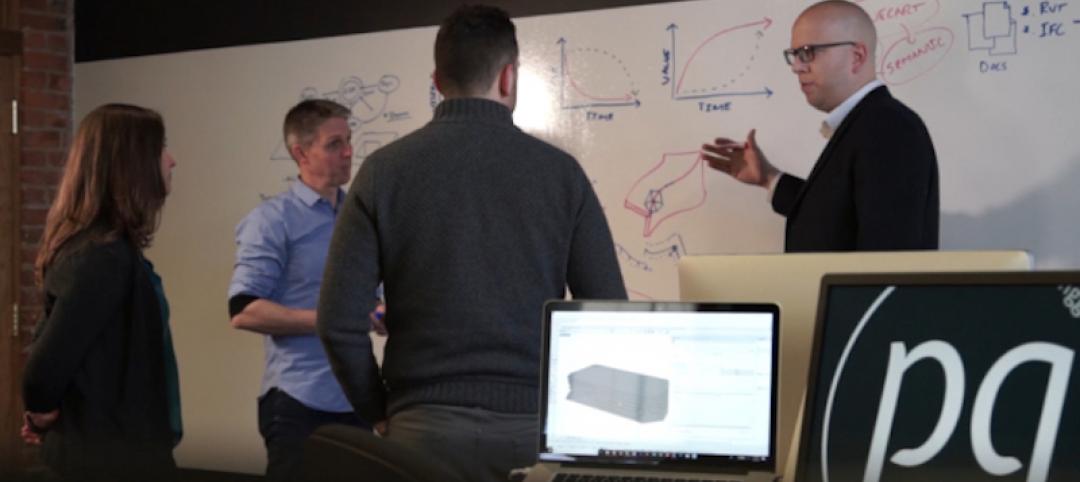

For meeting specific business needs, several progressive businesses have even opted to invest in creating their own ‘home brew’ solutions, such as TTX (which has since evolved into the Konstru product). In Proving Ground’s work, we have created our own extensive codebase called Conveyor and we are actively working with businesses to customize specific workflow solutions.

And yet for all the options available, focusing solely on the technology would be a red herring. In my experience, some of the more challenging problems of interoperability have less to do with technical limitations in a user’s software stack. Oftentimes, the larger problems stem from skills gaps, poor project planning, and a lack of trust in data from others.

Bridging Skills Gaps

I have found that very few training courses on digital design technology address interoperability directly. Most curricula focus chiefly on the operations of the software and less on workflow-related topics such as how data can be used between systems. In years past, this subject matter may have been considered too niche. Today, the coordination between multiple tools is paramount – especially in the cases of large projects with many stakeholders.

Interoperability is also a core concept related to data-driven processes: if it is the ambition of a company to create feedback loops with data from several systems into the design process, it follows that interoperability skills are essential. This means making investments in expert knowledge and training for data manipulation and, yes, even coding.
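As a small illustration of the kind of data manipulation skill this implies, the sketch below joins exports from two systems on a shared element identifier and reports what fails to match. It is a minimal sketch under stated assumptions: the CSV layouts, the column names (`element_id`, `length_m`, `utilization`), and the sample values are all hypothetical and do not come from any particular tool's export format.

```python
import csv
import io

# Hypothetical exports: column names and values are invented for illustration.
MODEL_EXPORT = """element_id,type,length_m
B-101,beam,6.0
B-102,beam,4.5
C-201,column,3.2
"""

ANALYSIS_EXPORT = """element_id,utilization
B-101,0.82
C-201,0.47
C-999,0.91
"""


def read_by_id(text):
    """Index CSV rows by their element_id column."""
    return {row["element_id"]: row for row in csv.DictReader(io.StringIO(text))}


def merge_exports(model_text, analysis_text):
    """Join the two exports on element_id and report what did not match."""
    model = read_by_id(model_text)
    analysis = read_by_id(analysis_text)
    # Keep only elements present in both systems, attaching the analysis result.
    merged = {
        eid: {**model[eid], "utilization": float(analysis[eid]["utilization"])}
        for eid in model.keys() & analysis.keys()
    }
    # Elements modeled but never analyzed, and results with no matching element.
    missing_results = sorted(model.keys() - analysis.keys())
    unknown_elements = sorted(analysis.keys() - model.keys())
    return merged, missing_results, unknown_elements


merged, missing_results, unknown_elements = merge_exports(MODEL_EXPORT, ANALYSIS_EXPORT)
print("merged:", sorted(merged))
print("modeled but not analyzed:", missing_results)
print("analyzed but not in model:", unknown_elements)
```

The useful part is less the join itself than the two mismatch lists: surfacing which elements did not survive the round trip is the sort of check a team can only run if someone knows how to work with the data directly.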

Planning the Project Workflow

Even in today’s BIM-centric world, it is not uncommon for a project to operate without a BIM execution plan. Where a plan does exist, the outlined approach usually lacks the nuance needed to specify workflows that extend beyond primary BIM authoring tools, such as Revit.

Experience shows me that project teams entering into production are often wholly unprepared to deal with the complexity associated with translating and transferring project data. This leads to missed opportunities for efficiency even when the technical workflow solutions are readily available. Even the most sophisticated workflow technology cannot replace the need for a knowledgeable team operating within a clear production plan.

Building Trust in Data

Ultimately, the benefits of data interoperability among the larger team hinge on one factor that is also the most challenging to establish: trust. Competing value systems and cantankerous project relationships are abundant in the construction industry. Liability concerns can keep useful digital assets locked away from other participants. In some instances, the receiving party prefers to discard and rebuild entire models. The reason: they outright assume the data is incorrect.

The result: restarts, rework, and data loss.

Establishing trusted relationships among a team in relation to data takes time and a track record. This is complicated by the construction industry’s tendency to reassemble new teams project-to-project. However, even with newly formed teams, I believe it is important to address data workflows and accountability early on in the process. For example, it is still uncommon for us to see robust digital data uses and transfer protocols defined in project contracts – even with the availability of resources such as the AIA’s Digital Practice documents.

Implementation is Fundamental

Today, the technical challenges of interoperability remain complicated, although disconnects continue to be bridged with new tools. However, I continue to observe that the limiting factor of industry interoperability comes down to more fundamental questions of implementation: growing data literacy, improving project workflow planning, and cultivating trust in data are all critical to realizing the potential of industry interoperability and a data-driven transformation.
