If all goes as planned, Canada’s third-largest telecommunications company, Telus, next month will open what has been billed as one of the most energy-efficient data centers in the world. The $75 million, 215,000-sf facility, located in Kamloops, B.C., is projected to use up to 80% less power and 86% less water than a typical data center of its size. Its peak power usage effectiveness (PUE), the ratio of total energy used by the data center to the energy delivered to the computing equipment, is estimated at a minuscule 1.15.
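To make the ratio concrete, here is a minimal Python sketch that computes PUE from energy totals. The function names and kWh figures are hypothetical, picked so the result lands on the 1.15 projected for Kamloops. They are not Telus measurements, and the sketch uses annual totals even though the article's figure is a peak value.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue_value: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting) per unit of IT energy."""
    return pue_value - 1.0


# Hypothetical annual figures, not Telus data:
# 10 GWh delivered to the IT load, 11.5 GWh drawn by the whole facility.
it_kwh = 10_000_000
facility_kwh = 11_500_000

ratio = pue(facility_kwh, it_kwh)
print(f"PUE = {ratio:.2f}")
print(f"Overhead = {overhead_fraction(ratio):.0%} of IT energy")
```

A PUE of 1.0 would mean every kilowatt-hour reaches the computing equipment; at 1.15, roughly 15 kWh of overhead accompanies every 100 kWh of IT load.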

The “secret sauce,” according to the facility’s contractor, Skanska, is a patented cooling technology, called eOPTI-TRAX, that replaces the traditional chiller plant. Together with its modular data center partner, Inertech, Skanska supplied the distributed, closed-loop system, which greatly expands the temperature range at which a facility can utilize outdoor air for “free” cooling. Telus’ Kamloops Internet Data Centre, for instance, will be able to use 100% outside air for cooling when temperatures are as high as 85°F—a huge improvement over the 45°F threshold typical with traditional chiller plant setups.

In lieu of underfloor air distribution, which requires numerous fans to push cold air toward the racks to cool the servers, the eOPTI-TRAX approach uses a contained hot- and cold-aisle design. Air circulation in the server aisles is optimized, and liquid refrigerant coils lining the inside of the server racks’ rear walls draw and absorb the heat. The scheme reduces hot-aisle temperatures from 160°F to just 75°F, according to Skanska.

The Telus project is among a handful of recently completed data centers that are raising the bar for energy and water efficiency. Building Teams are employing a range of creative solutions—from evaporative cooling to novel hot/cold-aisle configurations to heat recovery schemes—in an effort to slash energy and water demand. In addition, a growing number of data center developers are building facilities in cool, dry locations to take advantage of 100% outdoor air for cooling.

The National Center for Atmospheric Research’s new $70 million, 153,000-sf Wyoming Supercomputing Center in Cheyenne, for instance, uses the region’s cool, dry air in combination with evaporative cooling towers to chill the supercomputers 96% of the year. Even when factoring in the facility’s administrative offices, the building’s ultimate PUE is projected to be 1.10 or less, placing it in the top 1% of energy-efficient data centers worldwide. Where possible, the data center reuses waste heat for conditioning the office spaces and for melting snow and ice on the walkways and loading docks. Chilled beams provide efficient cooling in the administrative areas.

Facebook’s new model for data centers

While a temperate climate can be hugely advantageous for data center owners, it’s not a requirement for achieving a low PUE and WUE (water usage effectiveness), say data center design experts. Building Teams and technology providers continue to develop schemes that can operate efficiently at higher temperatures and relative humidity levels.
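WUE is usually defined as a site’s annual water use in liters divided by the energy delivered to its IT equipment in kilowatt-hours. The minimal Python sketch below applies that definition to two hypothetical facilities with the same IT load. The function names and water figures are illustrative assumptions, chosen only to mirror the 86% water reduction claimed for Kamloops, and are not measured data.

```python
def wue(annual_site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water usage effectiveness in liters per kWh of IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return annual_site_water_liters / it_equipment_kwh


def percent_reduction(baseline: float, improved: float) -> float:
    """Fractional reduction of `improved` relative to `baseline`."""
    return 1.0 - improved / baseline


# Hypothetical annual figures for two facilities, each with a 10 GWh IT load
baseline = wue(18_000_000, 10_000_000)   # conventional design (assumed)
efficient = wue(2_520_000, 10_000_000)   # efficient design (assumed)

print(f"Baseline WUE:  {baseline:.2f} L/kWh")
print(f"Efficient WUE: {efficient:.3f} L/kWh")
print(f"Water saved:   {percent_reduction(baseline, efficient):.0%}")
```

Because both metrics share the IT energy denominator, PUE and WUE can be tracked side by side for the same facility and period.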

Take Facebook’s data center in Forest City, N.C., for example. Unlike the company’s other data center locations in Prineville, Ore., and Luleå, Sweden, the Forest City facility sits in a warm, humid climate, yet it has been able to achieve a PUE on par with the other installations, at 1.07, according to Daniel Lee, PE, Data Center Design Engineer at Facebook.

“Last summer, we had the second-hottest summer on record in Forest City, and we didn’t have to use our DX (direct expansion coil) system,” says Lee. “Although it was hot, with highs of 103°F, the relative humidity was low enough so that we could use the water (evaporative cooling) to cool the space.”

The trick, says Lee, is a simplified, holistic approach to data center design that optimizes not only the building mechanical systems but also the computer hardware (servers and racks) and software applications, all with an eye toward reliability and energy efficiency. Traditional mechanical components, such as underfloor air distribution (UFAD), chillers, and cooling towers, are replaced with a highly efficient evaporative cooling scheme that uses 100% airside economization and hot-aisle containment. An open-rack server setup with exposed motherboards greatly reduces the energy required to cool the equipment. The result is a facility with fewer moving parts that can break down, one that can operate efficiently at interior temperatures in excess of 85°F.
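Lee’s point about humidity follows from basic psychrometrics: a direct evaporative cooler can only bring air down toward its wet-bulb temperature, and drier air has a lower wet-bulb temperature. The Python sketch below is hypothetical and is not Facebook’s control logic. It assumes Stull’s empirical wet-bulb approximation, an 85% evaporative effectiveness, and an 85°F supply-air ceiling borrowed from the interior temperature cited above, then picks the least energy-intensive mode that stays under that ceiling.

```python
import math


def f_to_c(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


def wet_bulb_c(dry_bulb_c: float, rh_percent: float) -> float:
    """Stull's empirical wet-bulb approximation (roughly 5-99% RH, -20 to 50 C)."""
    t, rh = dry_bulb_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)


def evaporative_supply_c(dry_bulb_c: float, rh_percent: float,
                         effectiveness: float = 0.85) -> float:
    """Direct evaporative cooling: supply air closes `effectiveness` of the
    gap between dry-bulb and wet-bulb temperature (assumed value)."""
    twb = wet_bulb_c(dry_bulb_c, rh_percent)
    return dry_bulb_c - effectiveness * (dry_bulb_c - twb)


def cooling_mode(dry_bulb_f: float, rh_percent: float,
                 supply_limit_f: float = 85.0,
                 effectiveness: float = 0.85) -> str:
    """Hypothetical mode selection: outside air, then evaporative, then DX."""
    t = f_to_c(dry_bulb_f)
    limit = f_to_c(supply_limit_f)
    if t <= limit:
        return "outside air"  # 100% airside economization, no water needed
    if evaporative_supply_c(t, rh_percent, effectiveness) <= limit:
        return "evaporative"  # dry enough for evaporation to reach the limit
    return "DX"               # too humid: fall back to direct expansion


for temp_f, rh in [(80, 50), (103, 30), (103, 60)]:
    print(f"{temp_f}F at {rh}% RH -> {cooling_mode(temp_f, rh)}")
```

Under these assumptions, a 103°F afternoon at a hypothetical 30% relative humidity stays in evaporative mode, while the same temperature at 60% falls back to DX.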

Facebook made waves in 2011 when it made public the design specs for its first in-house data center, in Prineville, under a program called the Open Compute Project. Modeled after open-source software communities, the program relies on crowdsourcing to share and improve on Facebook’s base data center design.

“We give the design away; you can take it and build it yourself,” says Chuck Goolsbee, Datacenter Site Manager at Facebook’s Prineville location. “All the components are there to build it in sort of a LEGO-like manner, from the building itself down to the servers.”

Facebook expects big things from the Open Compute Project. Many of the core component suppliers are involved—including Dell, Hewlett-Packard, Intel, and AMD—and thousands of data center experts have participated in engineering workshops and have given feedback to the group.

“Fifteen years from now, the DNA from Open Compute will be in every data center in the world,” says Goolsbee.

Related Stories

Sponsored | BIM and Information Technology | Oct 15, 2018

3D scanning data provides solutions for challenging tilt-up panel casino project

At the top of the list of challenges for the Sandia project was that the building’s walls were being constructed entirely of tilt-up panels, complicating the ability to locate rebar in event future sleeves or penetrations would need to be created.

BIM and Information Technology | Aug 16, 2018

Say 'Hello' to erudite machines

Machine learning represents a new frontier in the AEC industry that will help designers create buildings that are more efficient than ever before.

BIM and Information Technology | Aug 16, 2018

McKinsey: When it comes to AI adoption, construction should look to other industries for lessons

According to a McKinsey & Company report, only the travel and tourism and professional services sectors have a lower percentage of firms adopting one or more AI technologies at scale or in a core part of their business.

BIM and Information Technology | Jul 30, 2018

Artificial intelligence is not just hysteria

AI practitioners are primarily seeing very pointed benefits within problems that directly impact the bottom line.

AEC Tech | Jul 24, 2018

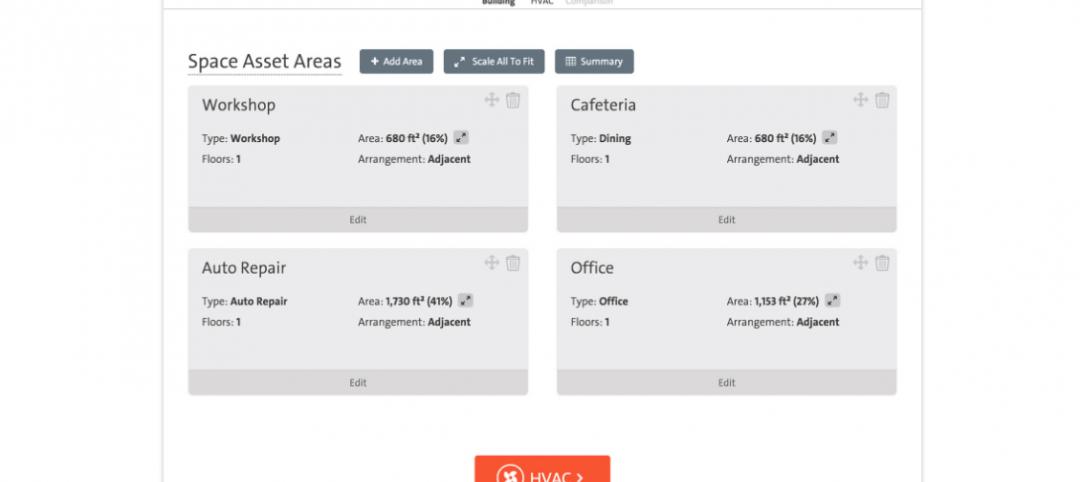

Weidt Group’s Net Energy Optimizer now available as software as a service

The proprietary energy analysis tool is open for use by the public.

Accelerate Live! | Jul 17, 2018

Call for speakers: Accelerate AEC! innovation conference, May 2019

This high-energy forum will deliver 20 game-changing business and technology innovations from the Giants of the AEC market.

BIM and Information Technology | Jul 9, 2018

Healthcare and the reality of artificial intelligence

Regardless of improved accuracy gains, caregivers may struggle with the idea of a computer logic qualifying decisions that have for decades relied heavily on instinct and medical intuition.

BIM and Information Technology | Jul 2, 2018

Data, Dynamo, and design iteration

We’re well into the digital era of architecture which favors processes that have a better innovation cycle.

Accelerate Live! | Jun 24, 2018

Watch all 19 Accelerate Live! talks on demand

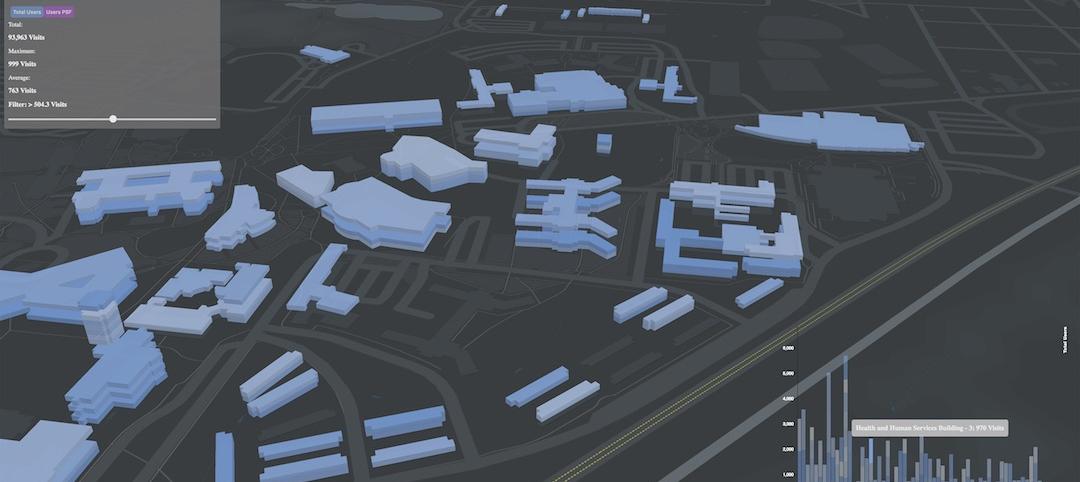

BD+C’s second annual Accelerate Live! AEC innovation conference (May 10, 2018, Chicago) featured talks on AI for construction scheduling, regenerative design, the micro-buildings movement, post-occupancy evaluation, predictive visual data analytics, digital fabrication, and more. Take in all 19 talks on demand.

BIM and Information Technology | Jun 12, 2018

Machine learning takes on college dropouts

Many schools use predictive analytics to help reduce freshman attrition rates.