Artificial intelligence isn’t just T-800s and Skynet, or the boogeyman that haunted Stephen Hawking’s dreams. AI, and everything under that broad umbrella, is already at work every day making our lives easier. Machine learning, although less sensational than other forms of AI, is among the most useful and ubiquitous AI applications in use.

Many people think artificial intelligence and machine learning are synonymous, but that isn’t the case. Where AI is a broad term for the entire concept of intelligent machines, machine learning is a specific application of AI more closely related to data mining or statistics. It is a subset of AI in which a computer program is fed data and learns from that data to create a model and, ultimately, a prediction.

But what exactly does learning mean in this context? According to a widely quoted definition from Tom Mitchell, a professor in the Machine Learning Department in the School of Computer Science at Carnegie Mellon University, “A computer program is said to learn from experience ‘E’ with respect to some class of tasks ‘T’ and performance measure ‘P’ if its performance at tasks in ‘T,’ as measured by ‘P,’ improves with experience ‘E.’” In short, a computer program is said to have learned if it can improve the outcome of a specific task based on the past experience it has been provided with.

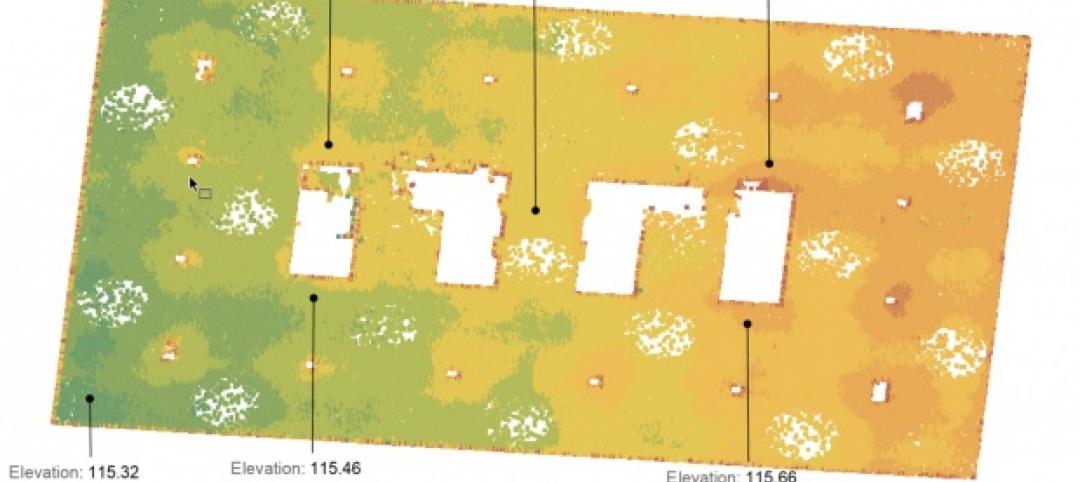

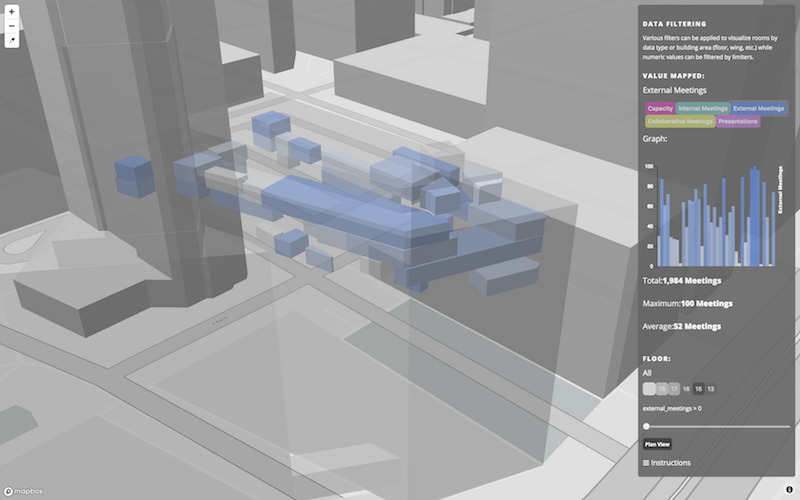

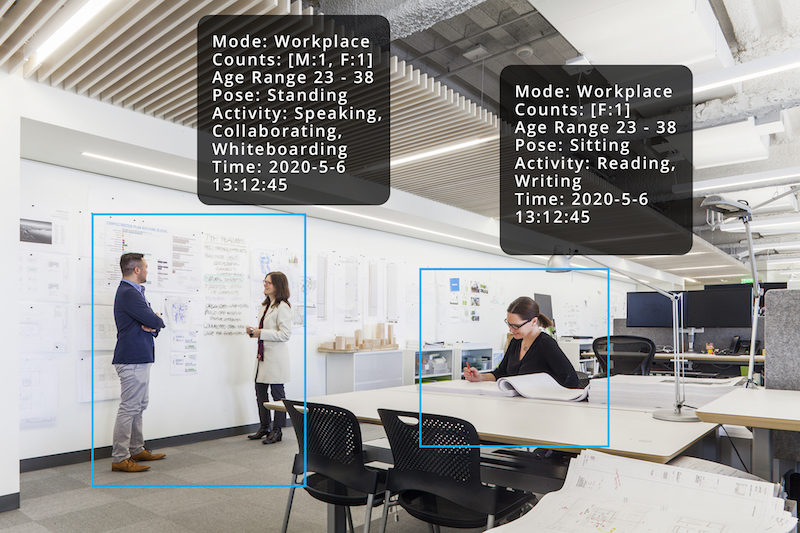

Data streams are crucial to success with machine learning. Here, Perkins+Will created space utilization data visualizations to track and learn from how building occupants use spaces in a building or campus.

Machine Learning in Architecture

At WeWork, a company that specializes in co-working and office spaces, machine learning is being used to determine the right number of meeting rooms for a given office building. Too few meeting rooms creates stress for employees; too many wastes space.

WeWork uses data about an office’s floor plan, and about how employees actually use the office spaces it has previously worked on, to train a machine learning system known as a neural network.

The network processes the data it is shown over and over and begins to recognize patterns and learn how employees use the meeting rooms.

After the network has been properly trained, a model is formed. This model is then provided with an input example (e.g., the number of employees or meeting rooms) to create a prediction. It is this prediction that will inform WeWork of the best number of meeting spaces to include in the design and how often they will actually be used.

The more data the neural network is given, the better the model will become and the more accurate the predictions will be. In other words, experience “E” (the data) is improving the performance measure “P” (accuracy of predicted occupancy of the meeting rooms vs. the actual occupancy) of the task “T” (predicting how many meeting rooms are needed based on previously unseen data).
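The relationship between experience, task, and performance measure can be illustrated with a minimal sketch. This is not WeWork’s system: it swaps the neural network for a one-feature least-squares line, and the data (headcount mapped to meeting rooms in use) is synthetic and hypothetical. The function names `fit_line`, `mean_abs_error`, and `make_offices` are illustrative only.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b

def mean_abs_error(model, xs, ys):
    """Performance measure P: average gap between prediction and label."""
    a, b = model
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)

def make_offices(n, rng, noise=1.5):
    """Hypothetical data: office headcount -> meeting rooms in use at peak."""
    xs = [rng.randint(20, 400) for _ in range(n)]
    ys = [0.05 * x + 2 + rng.gauss(0, noise) for x in xs]
    return xs, ys

rng = random.Random(0)
# Held-out offices the model never sees during training (task T).
test_x, test_y = make_offices(200, rng)

# Experience E grows from 5 to 500 example offices.
for n in (5, 50, 500):
    train_x, train_y = make_offices(n, rng)
    model = fit_line(train_x, train_y)
    print(n, round(mean_abs_error(model, test_x, test_y), 3))
```

On data like this, the held-out error typically shrinks as the training set grows, although it will not necessarily fall on every single run.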

This process creates a feedback loop: buildings provide data that is used to generate a prediction, which informs the design of a more-efficient, better-designed building. That building then provides better data, which will refine the prediction to create an even better building. The machines continue to get smarter and the buildings continue to improve.

WeWork has calculated that, compared with human designers, machine learning is about 40% more accurate at estimating meeting room occupancy, as measured by the gap between predicted and actual occupancy (known as the prediction bias). This percentage should only increase as more data is collected and fed into the neural network, and the loop proceeds along its continuous path.
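Prediction bias, as defined in the glossary at the end of this article, is simple arithmetic: the average prediction minus the average label. The sketch below uses made-up occupancy numbers purely for illustration; they are not WeWork’s data and are not meant to reproduce the 40% figure.

```python
def prediction_bias(predictions, labels):
    """Average prediction minus average label.

    Zero means no systematic over- or under-estimate.
    """
    return sum(predictions) / len(predictions) - sum(labels) / len(labels)

# Hypothetical peak-hour occupancy (people) for five meeting rooms.
actual = [4, 6, 3, 8, 5]
designer_guess = [6, 8, 5, 9, 7]   # assumed human estimates
model_guess = [5, 6, 3, 9, 5]      # assumed model predictions

print(round(prediction_bias(designer_guess, actual), 2))  # 1.8
print(round(prediction_bias(model_guess, actual), 2))     # 0.4
```

A bias near zero only says the estimates are right on average; individual rooms can still be badly over- or under-predicted, so it is usually read alongside a per-example error measure.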

In similar fashion, ZGF Architects is exploring machine learning statistical techniques to build models that identify how specific design elements contribute to overall occupant satisfaction in addition to space use. ZGF uses data it collects on its own, but also incorporates client data from space utilization sensors, GPS tracking, ID badges, and conference room bookings.

“With more data, the more we can zero in on the factors most responsible for, say, why a certain conference room’s characteristics make it more successful than those of another conference room,” says Tim Deak, a Workplace Strategist with ZGF. “We’ll also be able to say, with some confidence, how well we can expect a particular design articulation to fare in a similar project setting.”

The process is a combination of high-tech machine learning applications and good old-fashioned gumshoe intuition.

Perkins+Will’s machine learning efforts focus on computer vision and natural language processing to reach what it refers to as “extended intelligence.” Courtesy Perkins+Will.

A picture says a thousand words

The ever-important data that fuels machine learning doesn’t just have to be numbers and statistics. Machines can also be trained with images.

ZGF is currently developing a classification tool that will use machine learning to improve how the firm conducts occupancy studies. Before ZGF begins designing a project, the firm evaluates clients’ existing spaces to better understand how they are being used. This data is then used to inform the team of the best ways to optimize the new project so employees will make the best use of their space.

The current process for accomplishing this task is as straightforward and nontechnical as it gets; the design team will walk the floors to observe and manually tally each employee’s furniture and equipment setup.

“For larger client offices, this can take days and multiple people,” says Dane Stokes, Design Technology Specialist with ZGF. “With machine learning, we intend to drastically streamline the process.”

In the new, streamlined process, designers will capture video of the employee workspaces and use it to train machines to automatically classify the items shown. The tool uses Google’s robust photo data set to categorize everything from the number of monitors on an employee’s desk to the specific brand of keyboard or chair used.

The beauty of machine learning comes in when an item isn’t already in the data set. “If a client’s preferred brand of office chair isn’t in Google’s library, we will capture 360-degree videos of the chair and train our machines to recognize it,” says Stokes. The next time the machine comes across that chair, it will recognize and categorize it immediately. Training a model on labeled examples in this way is referred to as supervised learning. According to Stokes, this process will help save time and money by “vastly reducing the number of human inputs needed, while helping us produce better designs in the future.”
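The idea of teaching a classifier a new label can be sketched in a few lines. This is not ZGF’s tool, which works on video frames; it is a toy nearest-centroid classifier over hand-picked numeric features, and the class name, feature choices, and measurements are all hypothetical.

```python
import math

class NearestCentroid:
    """Toy supervised classifier: one average feature vector per label."""

    def __init__(self):
        self.sums = {}    # label -> running per-feature sums
        self.counts = {}  # label -> number of examples seen

    def train(self, features, label):
        """Add one labeled example to the running average for its label."""
        if label not in self.sums:
            self.sums[label] = [0.0] * len(features)
            self.counts[label] = 0
        self.sums[label] = [s + f for s, f in zip(self.sums[label], features)]
        self.counts[label] += 1

    def predict(self, features):
        """Return the label whose centroid is closest to the input."""
        def distance(label):
            centroid = [s / self.counts[label] for s in self.sums[label]]
            return math.dist(centroid, features)
        return min(self.sums, key=distance)

clf = NearestCentroid()
# Hypothetical features: (seat height cm, back height cm, has armrests)
clf.train((45, 50, 1), "task chair")
clf.train((47, 52, 1), "task chair")
clf.train((75, 0, 0), "stool")
clf.train((73, 0, 0), "stool")

unknown = (46, 95, 1)  # a high-backed chair with no matching label yet
print(clf.predict(unknown))  # task chair (the closest label it knows)

# Supply labeled examples of the new chair, then ask again.
clf.train((46, 94, 1), "executive chair")
clf.train((45, 96, 1), "executive chair")
print(clf.predict(unknown))  # executive chair
```

Before the new examples are added, the classifier can only pick the nearest label it already knows; the labeled examples are what make the new category recognizable.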

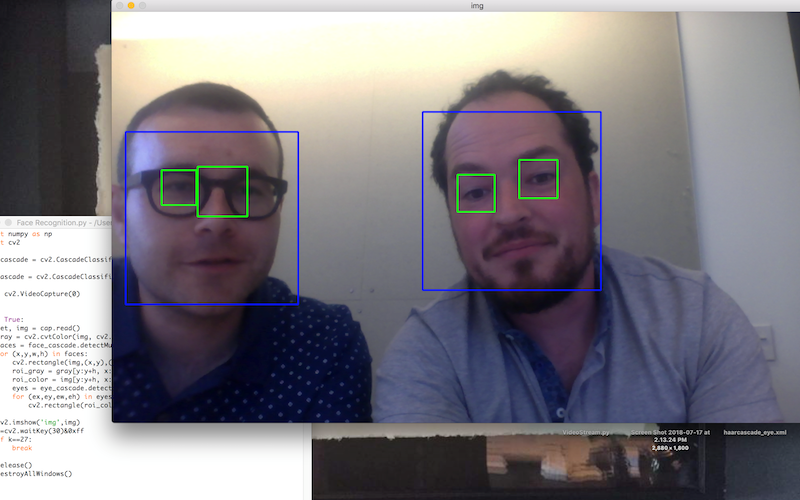

ZGF Workplace Strategist Tim Deak (left) and Design Technology Specialist Dane Stokes (right) share a screenshot of a custom-designed application for facial recognition. The same technology is being used to develop a machine learning tool that will be used to conduct ZGF’s occupancy studies. Courtesy ZGF.

From artificial intelligence to extended intelligence

Most of the current AI and machine learning efforts at Perkins+Will focus on computer vision and natural language processing to reach what it refers to as “extended intelligence,” according to Satya Basu, an Advanced Insights Analyst with P+W.

Computer vision allows graphic output—a massively critical part of the deliverables of the design process—to be rendered into feature-rich, machine-readable content, which is the first step in generating structured data at scale for both supervised and unsupervised learning.

Next, machine learning processes are leveraged for classification and clustering analyses of projects with different features that can be further blended or combined. Natural language processing is also leveraged on the combined output of labels, features, and descriptions to generate intelligent query and suggestion models.
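Clustering, the unsupervised half of that analysis, groups projects by their features without any labels. The sketch below is a plain k-means loop offered as one common clustering technique; the article does not say which algorithm Perkins+Will uses, and the project features and values here are hypothetical.

```python
import math

def kmeans(points, k, iterations=10):
    """Plain k-means; starts from the first k points so runs are repeatable."""
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment, centroids

# Hypothetical projects: (floor area in thousands of sq. ft., open-plan share)
projects = [(10, 0.8), (200, 0.2), (12, 0.7), (210, 0.3), (9, 0.9), (190, 0.25)]
assignment, centroids = kmeans(projects, k=2)
print(assignment)  # [0, 1, 0, 1, 0, 1]
```

In this toy data the floor-area feature dominates the distance calculation; with real features on very different scales, the values would normally be rescaled first.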

Machine Learning Glossary

Data set. A collection of examples.

Example. One row of a data set. An example contains one or more features and possibly a label.

Feature. An input variable used in making predictions.

Label. In supervised learning, the “answer” or “result” portion of an example.

Model. The representation of what a machine learning system has learned from the training data.

Neural network. A model that is composed of layers (at least one of which is hidden) consisting of simple connected units followed by nonlinearities.

Prediction. A model’s output when provided with an input example.

Prediction bias. A value indicating how far apart the average of predictions is from the average of labels in the data set.

Supervised learning. Training a model from input data and its corresponding labels.

Training. The process of determining the ideal parameters comprising a model.

Unsupervised learning. Training a model to find patterns in a data set, typically an unlabeled data set.
